Hey {{first_name|there}}!

I hope the holidays treated you well and the new year is off to a great and healthy start! I took a short break to rest and relax throughout the holidays, but the newsletter is back with its first edition of 2025.

👀 Taking a Look at Visual Intelligence

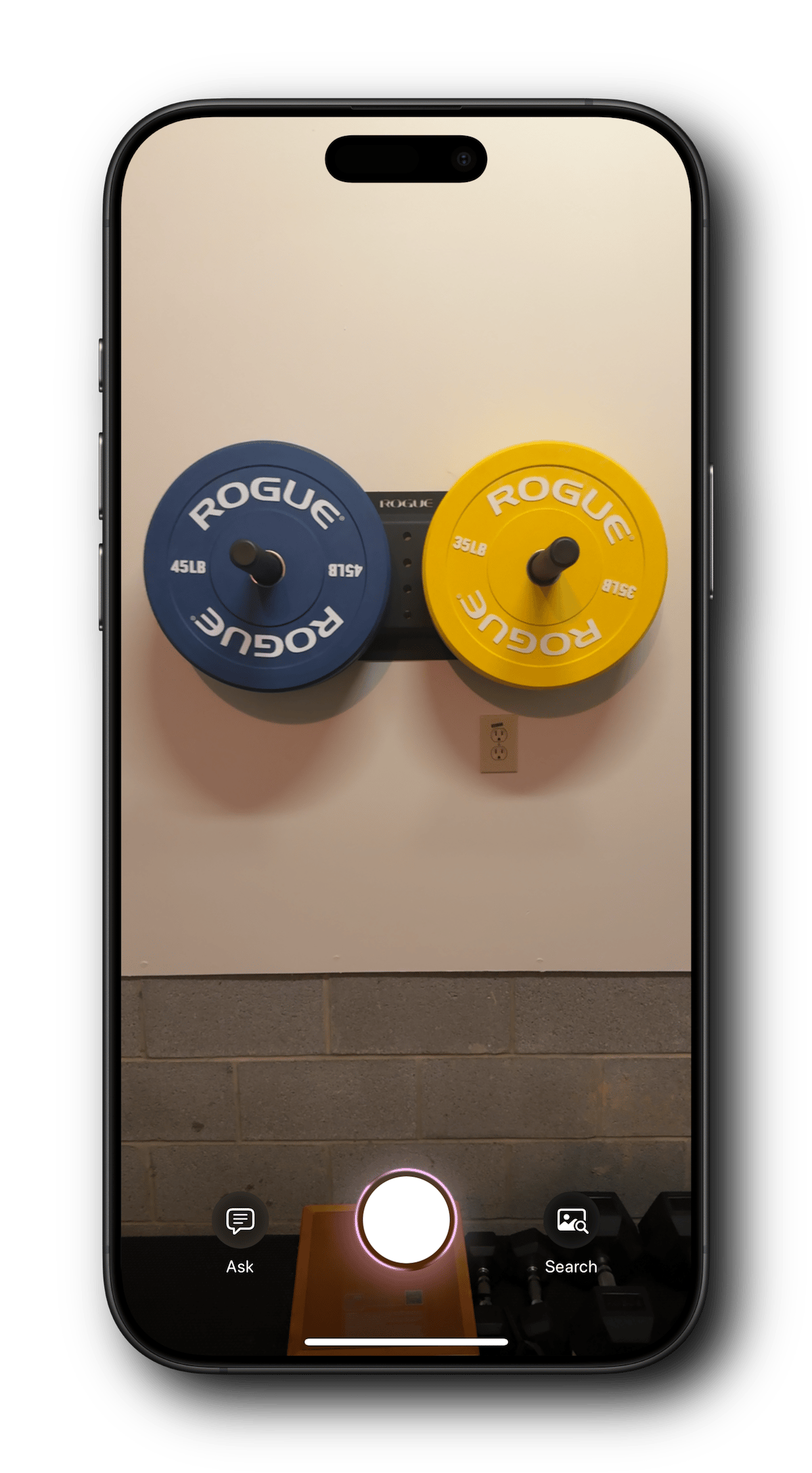

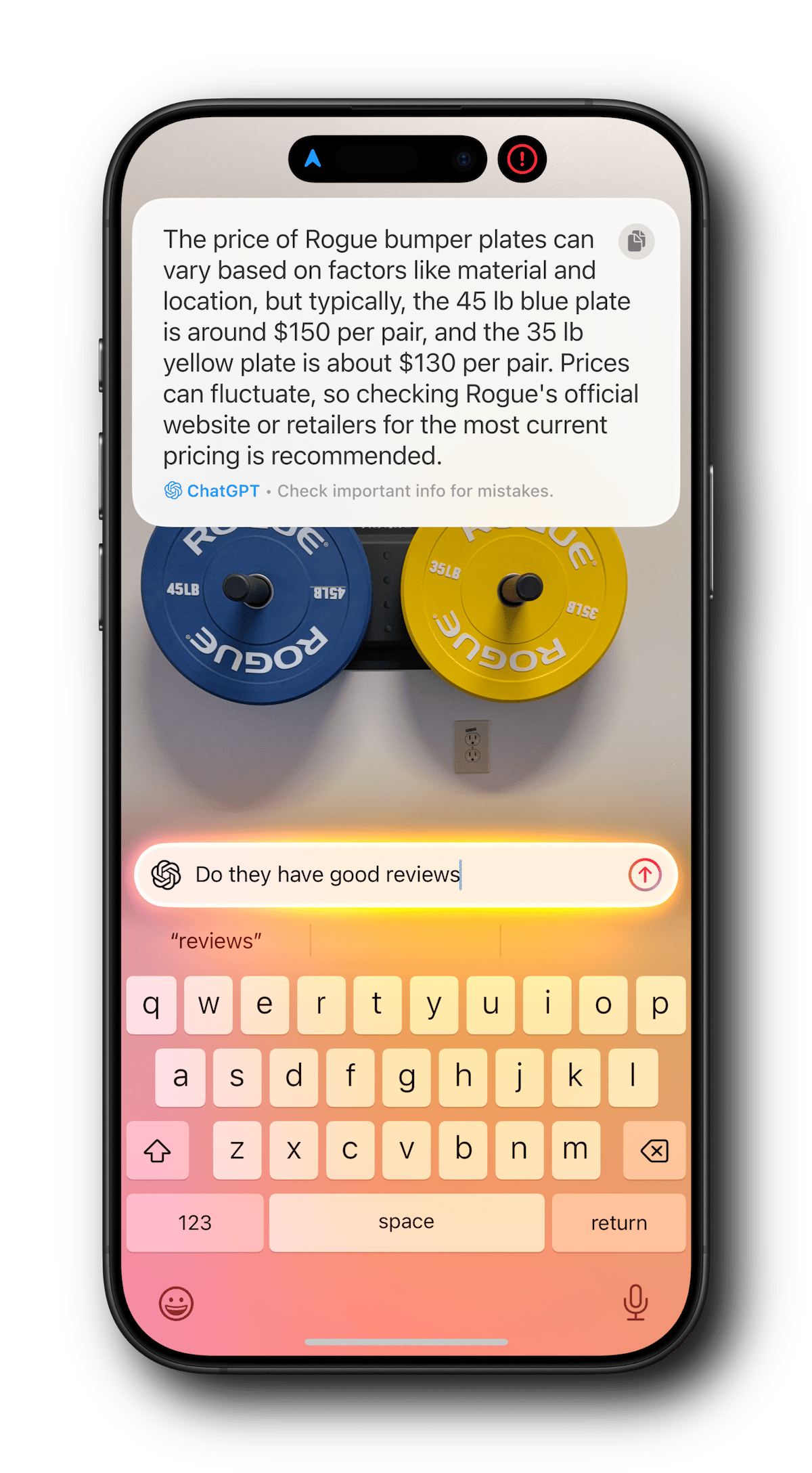

Visual Intelligence User Interface

In this edition, we'll examine Visual Intelligence, which is part of the Apple Intelligence suite and was introduced in iOS 18.2 last month. Keep in mind that Apple Intelligence is still in beta, so features and capabilities may change.

So what is Visual Intelligence? It's an assistant, launched with the Camera Control button, that uses the iPhone's camera and AI to help you learn more about the things around you. Point the camera at an object, then tap “Ask” or “Search” to learn more about it.

Let's take a look at the key features and functions:

🔗 Object Lookup: Get instant information about places, products, or landmarks.

🏢 Business Info: Check hours, services, and contact details, and in some cases, see a menu, call, or place an order.

📝 Text Interactions: Translate, summarize, or have text read aloud.

📅 Quick Actions: Call numbers, start an email, and create calendar events.

🔍 Image Search: Perform a reverse image search on Google.

To use Visual Intelligence, you'll need to meet the following system requirements:

Apple Intelligence must be available in your country

An iPhone 16 model (the Camera Control button is exclusive to these models)

iOS 18.2 or later

ChatGPT enabled in iOS Settings > Apple Intelligence & Siri (required to use the “Ask” feature)

As with other Apple Intelligence features that integrate ChatGPT, you can enable the service without having a ChatGPT or OpenAI account.

Without ChatGPT enabled, the tool is quite limited in what it can do, and the Search option only performs Google reverse image searches.

If you have a ChatGPT account and sign in to it in iOS Settings, each Visual Intelligence session in which you use the “Ask” feature is added to your ChatGPT chat history. The entire conversation is saved as a single chat; if you close the session and start a new one, it's saved as a new chat.

Tip: While using Visual Intelligence, avoid tapping the photo or anywhere other than the buttons, the copy icon in the results, or the keyboard. Doing so closes the session and returns you to the launch/capture screen, and you'll have to start over.

How It Works

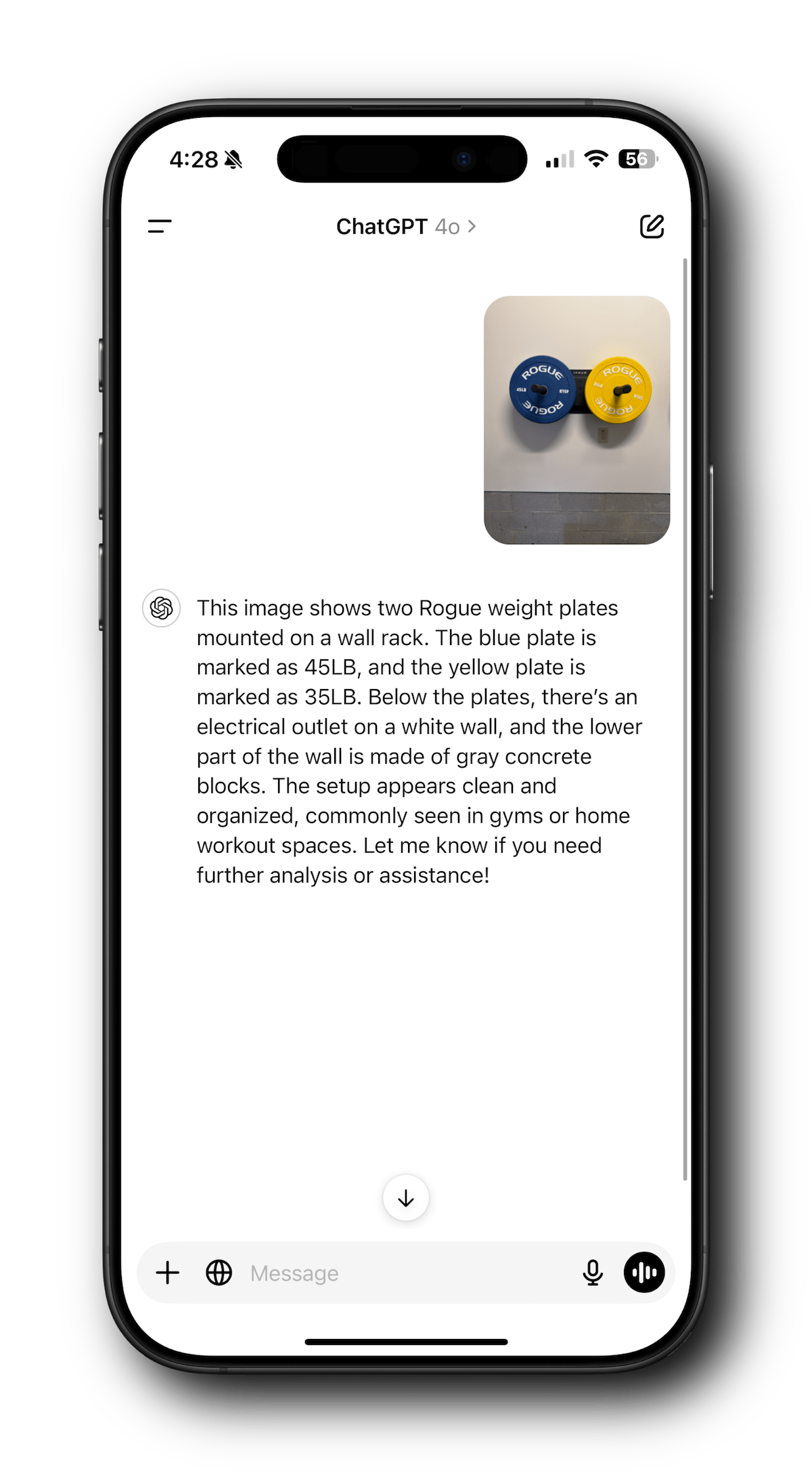

Let's walk through an example by asking Visual Intelligence about the weight plates mounted on the wall of my garage. Afterward, I'll ask about them directly in the ChatGPT app so you can compare how the two services respond.

After you launch Visual Intelligence by long-pressing the Camera Control button, you're presented with this simple interface. Point the iPhone at the item and tap Ask, Search, or the white shutter button (and then Ask or Search). In this example, I tapped Ask to use ChatGPT.

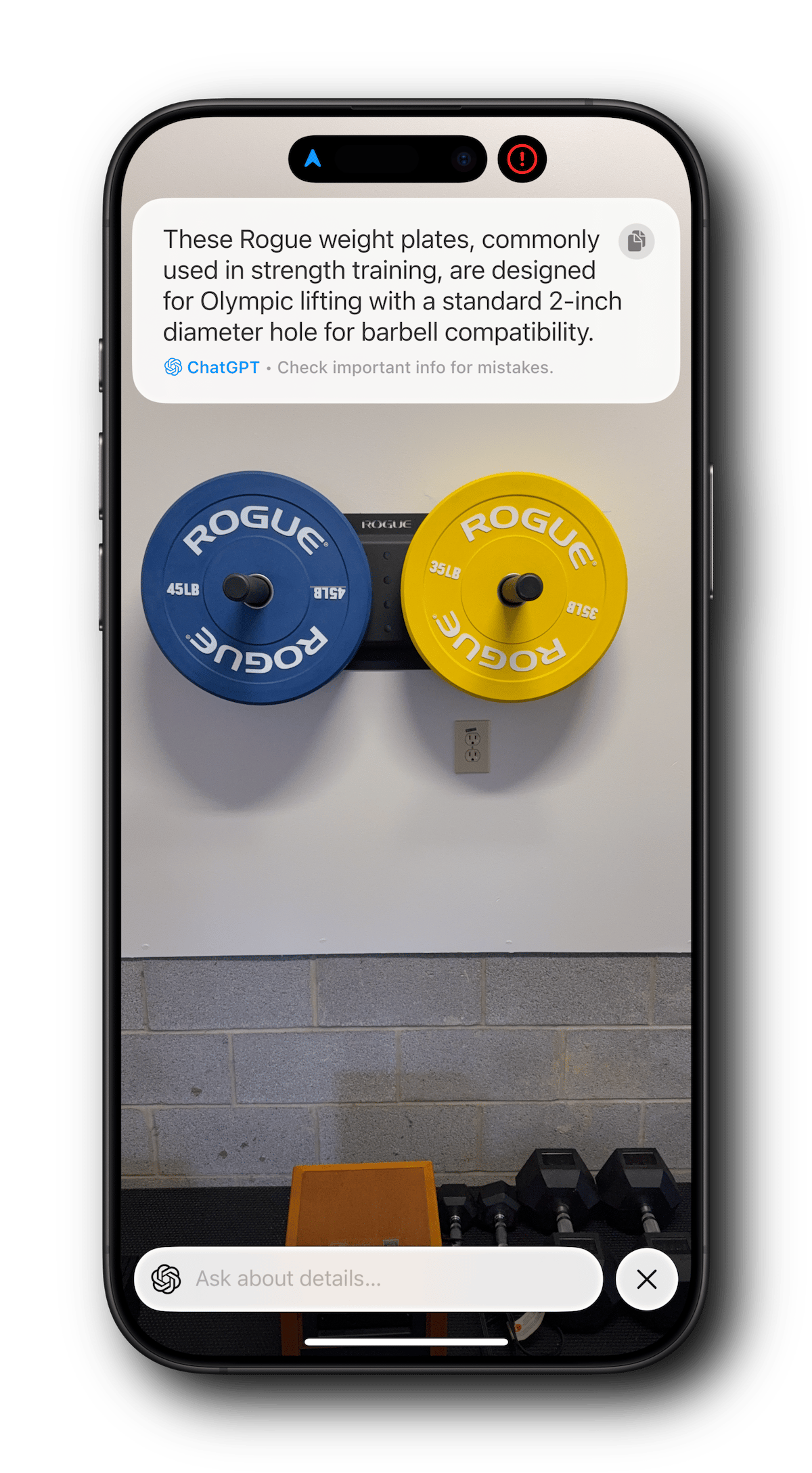

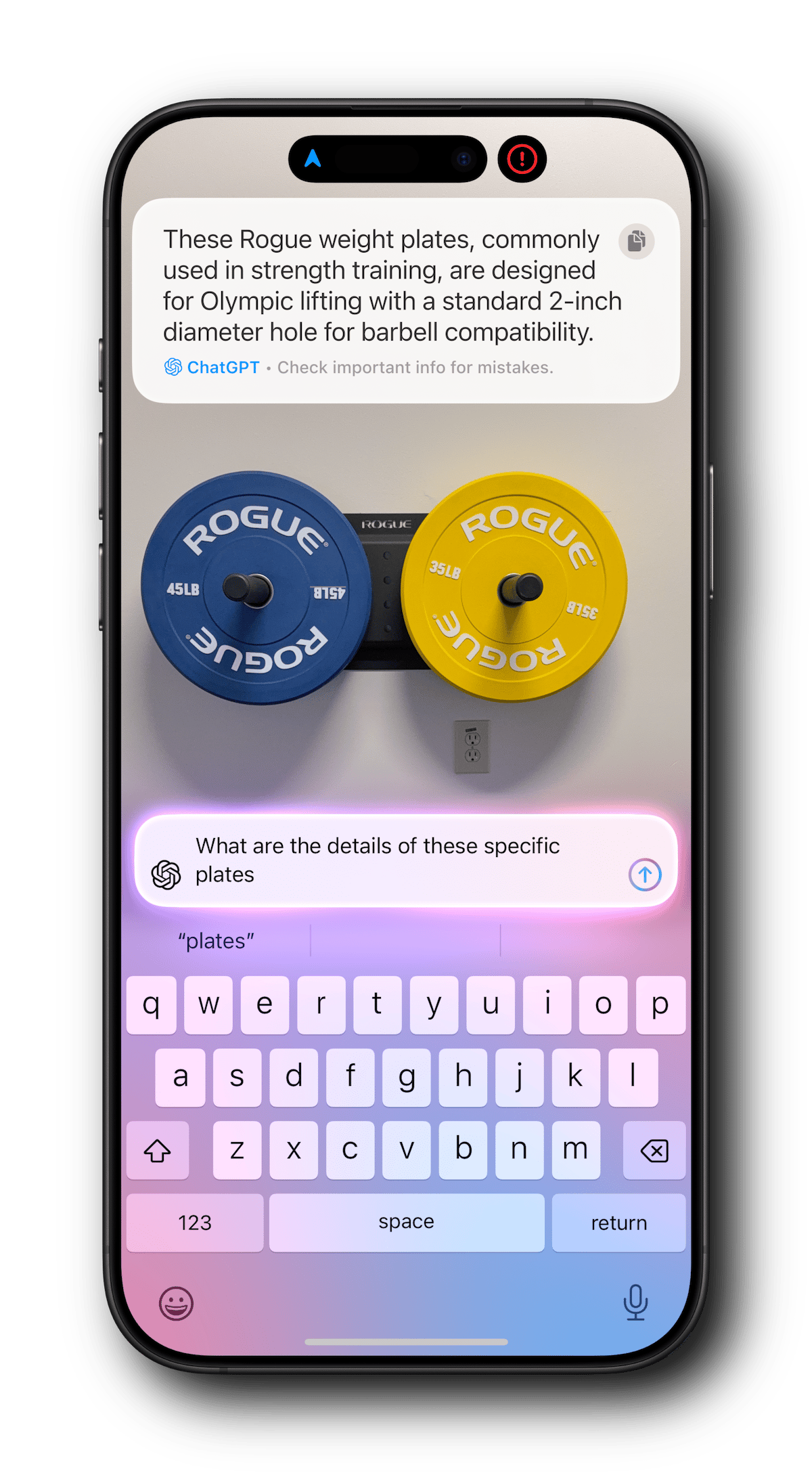

The image is processed, and ChatGPT returns some information. At this point, you can ask follow-up questions to continue the conversation.

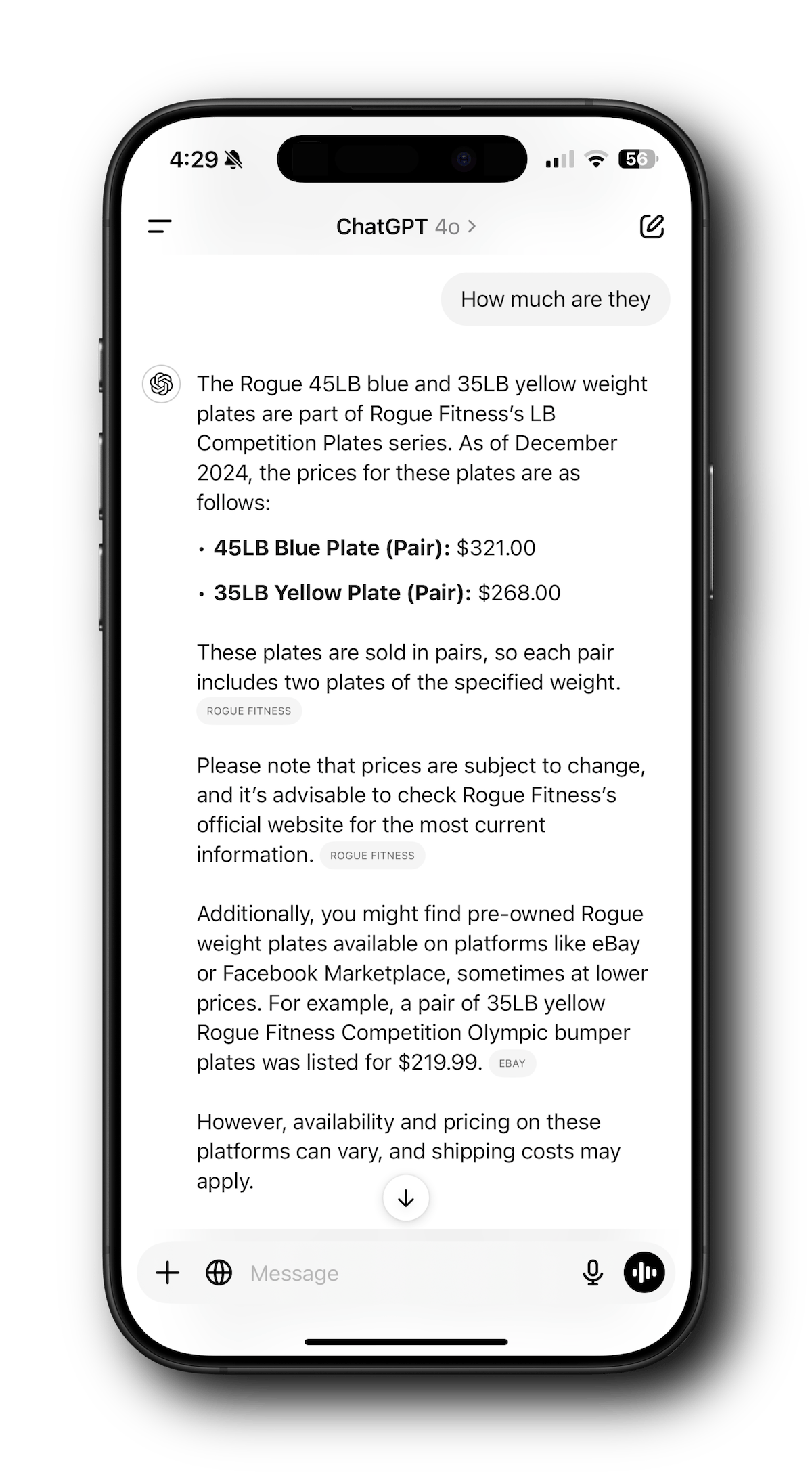

Now let’s go over to the ChatGPT app, take a photo of the weights, and see what it gives us.

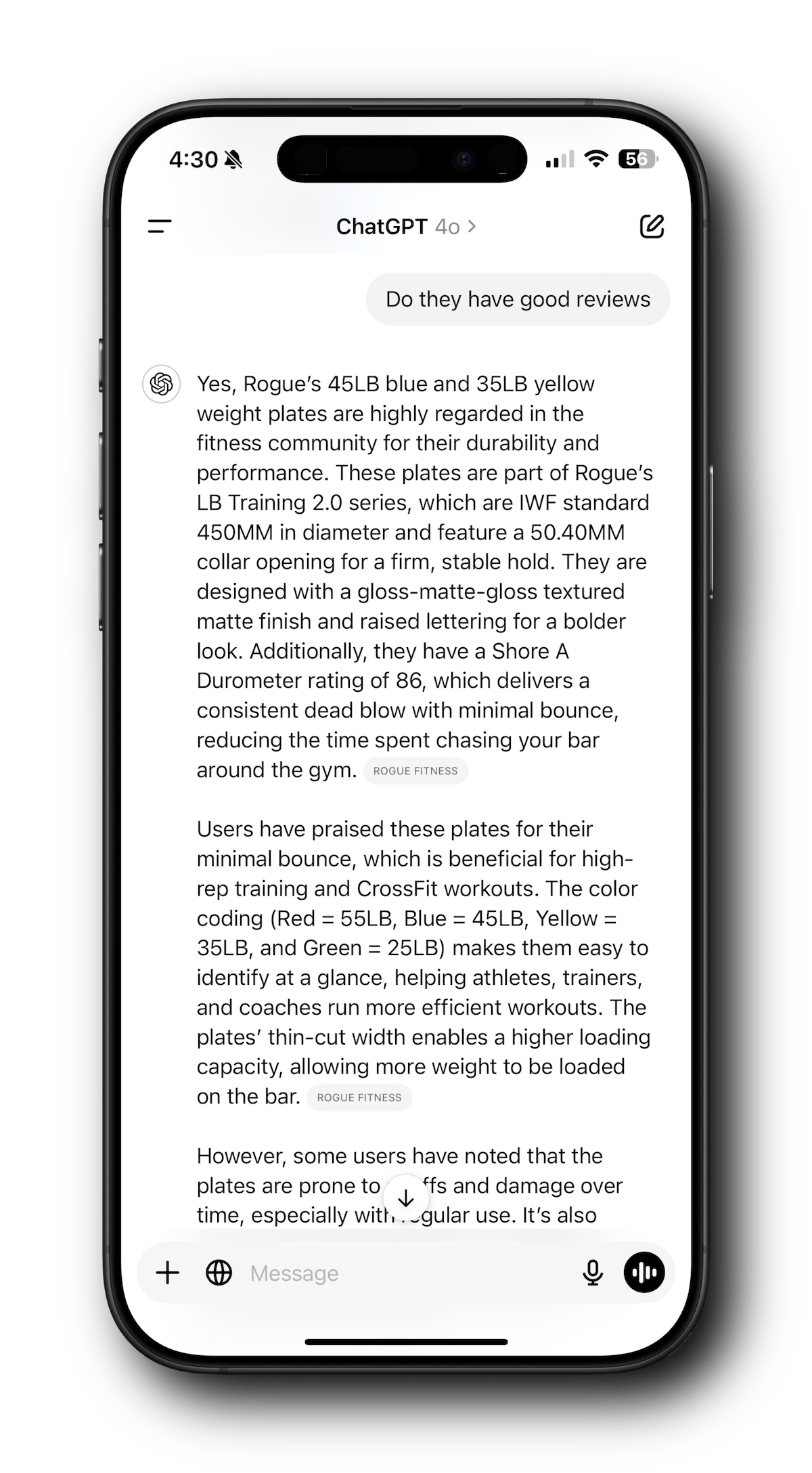

As with Visual Intelligence, you can ask follow-up questions in ChatGPT.

As you can see, the native ChatGPT experience returns more information, including helpful tips such as checking Facebook Marketplace to potentially save some money on the weights.

It appears Apple made a deliberate design decision to keep responses brief. That makes them quick to read, but they can leave out a considerable amount of information compared to the native ChatGPT app or website. This isn't necessarily a bad decision, but be aware that you may need to ask follow-up questions to get the information you want.

Overall, I think Visual Intelligence is off to a good start. It's fast, easy to access, and simple to use, though a few times I inadvertently tapped the wrong area and my session closed, which was frustrating. A confirmation dialog might solve that.

It's unfortunate that Apple hasn't made it available on the iPhone 15 Pro and Pro Max, which support Apple Intelligence, but perhaps that will happen in a future update. I'd also like a proper Visual Intelligence app that serves as an archive of my previous interactions, much like Shazam keeps a history of the songs it has identified for me. All in all, I'd give the service a solid B right now.

What do you think? Have you tried Visual Intelligence? Did you find it useful? Let me know in the poll and be sure to leave a comment.

Sponsored By

Writer RAG tool: build production-ready RAG apps in minutes

RAG in just a few lines of code? We've launched a predefined RAG tool on our developer platform, making it easy to bring your data into a Knowledge Graph and interact with it using AI. With a single API call, Writer LLMs will intelligently call the RAG tool to chat with your data.

Integrated into Writer’s full-stack platform, it eliminates the need for complex vendor RAG setups, making it quick to build scalable, highly accurate AI workflows just by passing a graph ID of your data as a parameter to your RAG tool.

🎙️ Basic AF Show: Grading Apple Intelligence with Adam Jones

Join us in this episode as we welcome back guest Adam Jones to discuss the latest Apple Intelligence features: what's good, what's not, and what's missing, plus some ways to use them that you may not have thought of.

Chapter Listing:

0:00 Intro

1:43 Hello Again, Adam Jones!

5:47 Privacy Concerns with Photos App?

13:49 AI Features in Recent Updates

16:13 Writing Tools

26:05 ChatGPT Integration

33:06 Image Generation Tools

36:59 Usefulness of Summaries

41:08 Audio Transcriptions

45:43 Visual Intelligence

52:51 Our Overall Grades

56:48 Close

Listen to and follow the show on Apple Podcasts, Spotify, and all the other podcast apps, as well as on YouTube and YouTube Music.

While the newsletter was on holiday break, the podcast stayed on its regular schedule, and we released a couple of fun episodes in December:

Our annual holiday episode showcases our favorite things from 2024. Craft made the list for the first time, along with the delightful Festivitas app, several captivating books, and much more.

Chris Freitag returned to thoroughly dig into iPhone 16 photography. There’s a lot to genuinely like, but is the new Camera Control button any good?

We hit our 5,000th download last week, and we're thrilled about it. It means a lot that you choose to spend some time with us!